from sklearn.linear_model import LogisticRegression

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

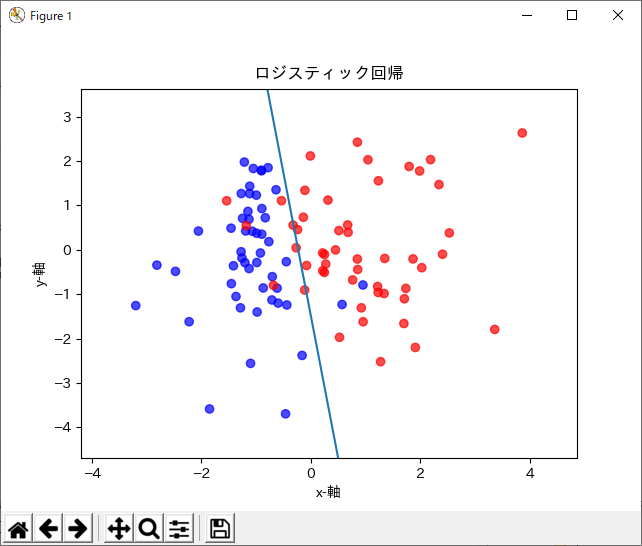

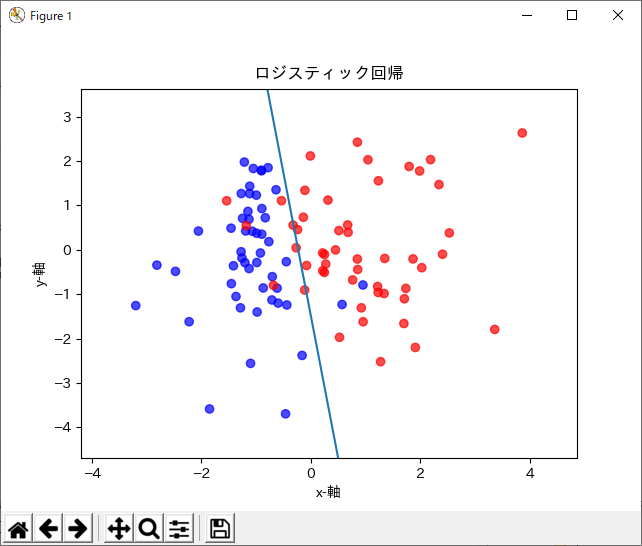

X,y=make_classification(n_samples=100, n_features=2, n_redundant=0, random_state=0)

train_x, test_x, train_y, test_y = train_test_split(X,y,random_state=42)

model=LogisticRegression()

model.fit(train_x, train_y)

print("coefficient: ", model.coef_)

print("intercept: ", model.intercept_)

pred_y = model.predict(test_x)

print("predict: ", pred_y)

import matplotlib

import matplotlib.pyplot as plt

import japanize_matplotlib

# Pass the colormap by name; matplotlib.cm.get_cmap was removed in matplotlib 3.9.
plt.scatter(X[:, 0], X[:, 1], c=y, cmap="bwr", alpha=0.7)

import numpy as np

# Decision boundary: the points where coef_[0][0]*x + coef_[0][1]*y + intercept_ = 0.
Xi = np.linspace(-10, 10)

Y = -model.coef_[0][0] / model.coef_[0][1] * Xi - model.intercept_[0] / model.coef_[0][1]

plt.plot(Xi,Y)

plt.xlim(min(X[:,0])-1, max(X[:, 0])+1)

plt.ylim(min(X[:,1])-1, max(X[:, 1])+1)

plt.title("Logistic Regression")

plt.xlabel("x-axis")

plt.ylabel("y-axis")

plt.show()

coefficient: [[2.25567275 0.35071038]]

intercept: [0.52004691]

predict: [0 0 0 1 0 1 1 1 0 0 0 1 0 0 0 1 1 1 0 0 0 1 1 0 0]
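
The line drawn by `plt.plot(Xi, Y)` is the set of points where the fitted model assigns a probability of 0.5 to class 1, that is, where `w0*x + w1*y + b = 0`. The following is a minimal sketch that checks this using only the standard library and the coefficient and intercept values printed above. The helper names `sigmoid`, `predict_proba`, and `boundary_y` are illustrative and are not part of scikit-learn.

```python
import math

# Values copied from the printed output above.
W0, W1 = 2.25567275, 0.35071038
B = 0.52004691


def sigmoid(z):
    """Logistic function mapping a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, y):
    """Probability of class 1 for the point (x, y) under the printed weights."""
    return sigmoid(W0 * x + W1 * y + B)


def boundary_y(x):
    """y-coordinate of the decision boundary at x, solving W0*x + W1*y + B = 0."""
    return -W0 / W1 * x - B / W1


if __name__ == "__main__":
    for x in (-1.0, 0.0, 1.0):
        y = boundary_y(x)
        print(f"x={x:+.1f}  boundary y={y:+.4f}  p(class 1)={predict_proba(x, y):.3f}")
```

Every point on the line should give a probability of 0.5 up to floating-point rounding. Because the first coefficient is positive, points to the right of the line get a probability above 0.5 and are predicted as class 1.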